Infra Writeup 2023

Published on 23 October 2023

Getting into the Groove

DownUnderCTF 4.0 ran over the weekend of 1 to 3 September 2023, and it was our biggest event ever! Over 2000 teams competed across 68 challenges, and more than 5 million requests later it was our most stable and successful CTF yet.

The total cost of running the infrastructure on GCP was $875.79 AUD over the two weeks leading up to and during the event. This was higher than last year because we had a dedicated test environment where we could test all our challenges and infra before deploying to prod, and because our challenges had more demanding resource requirements.

As always, a HUGE thank you to Google Cloud for being our DUCTF Infra Sponsor once again.

This year we didn’t change too much in terms of infrastructure as we did not have a major change in how we ran the CTF. In saying this, everything went a lot smoother this year and we are getting into the groove of how to run a secure, stable, and repeatable large-scale CTF.

Overall Setup

We ran this year’s CTF infrastructure with pretty much the same setup as last year. One big ol’ Kubernetes cluster (or k8s if you’re an abbreviatorian) hosted our CTFd instances, our 32 hosted non-isolated challenges, and our 9 on-demand isolated challenges. CTFd used a Cloud SQL MySQL database, with Cloud Redis for caching. For ingress to CTFd, we used Cloudflare tunnels to connect the cluster to Cloudflare, and for ingress to our challenges we used an L4 load balancer with the cluster as the backend and Traefik managing the request routing. Our cluster ran with an average of 10 nodes (the exact number depended on the current load, as it was autoscaled) in a single node pool.

Our on-demand isolated challenge setup was the same as last year, as we found that it worked super well and if it ain’t borked don’t bork it. A total of 4579 isolated instances were spawned throughout the competition, and the majority of these were for beginner/easy challenges, which is expected.

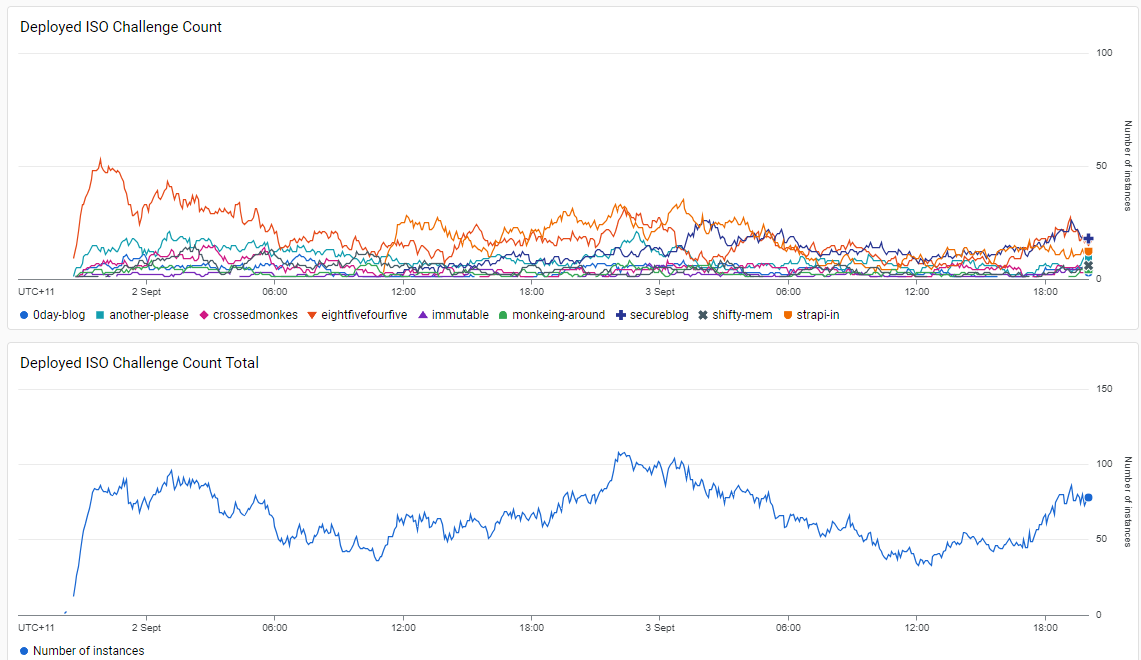

Isolated Challenges Stats

We aren’t going to go into detail about how the isolated challenges work, as we already covered this in our previous 2022 Infra Writeup. However, one improvement we would like to have for next year is pre-warmed instances. A common complaint about these instances is that they can take some time to start up, especially if they have containers that are slow to spin up (database, headless chrome, geth, etc). To improve this for next year, we hope to extend our kube-ctf challenge-manager to keep pre-warmed instances ready and assign them to teams on demand, giving almost instant challenge startup regardless of how long the containers take to start.
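To make the pre-warming idea concrete, here is a minimal Python sketch. It is not how kube-ctf works today: the `WarmPool` class, its method names, and the `start_instance` callback are all hypothetical, and a real version would also need to handle instance expiry and cleanup.

```python
from collections import deque
from typing import Callable, Deque, Dict


class WarmPool:
    """Keeps a few already-started instances ready to hand out (hypothetical)."""

    def __init__(self, start_instance: Callable[[], str], target_size: int) -> None:
        self._start_instance = start_instance  # slow call, e.g. creating the pods
        self._target_size = target_size
        self._idle: Deque[str] = deque()       # started but unassigned instances
        self._assigned: Dict[str, str] = {}    # team id -> instance id

    def refill(self) -> None:
        """Start instances until the idle pool is back at its target size."""
        while len(self._idle) < self._target_size:
            self._idle.append(self._start_instance())

    def idle_count(self) -> int:
        return len(self._idle)

    def assign(self, team_id: str) -> str:
        """Return the team's instance, taking a warm one when available."""
        if team_id in self._assigned:
            return self._assigned[team_id]
        if self._idle:
            instance = self._idle.popleft()     # instant: already running
        else:
            instance = self._start_instance()   # cold-start fallback
        self._assigned[team_id] = instance
        return instance
```

Calling `refill()` on a timer (or after every `assign()`) keeps the pool topped up, so only a burst larger than `target_size` ever pays the cold-start cost.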

What was new this year was a dedicated test project that replicated our prod setup to deploy and test our challenges.

No more testing in prod!

We also had an upgraded blockchain infrastructure (more on that later), an embedded prizes scoreboard, our first isolated TCP challenge, and enhanced infrastructure deployment scripts.

Challenge Infra Lifecycle

We are slowly making progress on creating a full CI/CD pipeline for our challenges to automate and QA all challenges as they come in. Our 2023 challenge lifecycle (from an infra point of view) looks like this:

- Challenge is merged into Challenges 2023 repo main branch

- Run script to generate Cloud Build config

- Run script to generate Kube Object config

- Manual updates to generated configs applied for anything challenge specific

- Manual build and push challenge Docker image to GCP Artifact Registry

- Manual apply k8s config to deploy challenge to test cluster

- QA with the author to ensure the challenge is working in test

- Manual apply k8s config to deploy challenge to prod cluster

Although this is better than previous years there is lots of room for improvement here, namely, each step starting with “manual” should be “automated” or 1 click approvals, such as the deployment steps.
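As an illustration of the "generate Kube Object config" step, the following Python sketch builds a plain Kubernetes `apps/v1` Deployment from a few challenge details. It is an assumption about what such a script could look like, not our actual generator: the function names, the choice of fields, and the label scheme are hypothetical.

```python
import json
from typing import Any, Dict


def deployment_manifest(name: str, image: str, port: int, replicas: int = 1) -> Dict[str, Any]:
    """Build a Kubernetes apps/v1 Deployment object for one challenge."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template labels.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


def render(manifest: Dict[str, Any]) -> str:
    """Serialise the manifest as JSON, which kubectl accepts alongside YAML."""
    return json.dumps(manifest, indent=2)
```

Because the output is ordinary JSON, the same generated object can be applied to the test cluster first and then, unchanged, to prod.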

This would also help with challenge patches and hotfixes by speeding up the process of getting updated code deployed, as right now this takes quite a lot of manual work and verification. In a similar vein, we also really want automated testing built into our challenges to prove that they work and that they are solvable. This year we did require a solve script (or at least a writeup) for each challenge, but we did not take the further step of packaging these up into live health-check sidecars that ensure challenges are working as intended. Although this was not a problem this year, we can foresee how it could help the Infra team deal with misbehaving challenges through alerting, self-healing, and integration with a Challenge Status Dashboard.
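A health check built from a solve script could be as small as the Python sketch below. It assumes the solve script prints the flag to stdout and exits with status 0 on success; that convention, and the function name `challenge_is_healthy`, are assumptions for illustration rather than something our challenges follow today.

```python
import subprocess
import sys
from typing import List


def challenge_is_healthy(solve_cmd: List[str], expected_flag: str, timeout_s: float = 60.0) -> bool:
    """Run the solve script and report whether its output contains the flag."""
    try:
        result = subprocess.run(
            solve_cmd,
            capture_output=True,
            text=True,
            timeout=timeout_s,  # a hung challenge counts as unhealthy
        )
    except (subprocess.TimeoutExpired, OSError):
        return False
    return result.returncode == 0 and expected_flag in result.stdout


if __name__ == "__main__":
    # Stand-in for a real solve script, using a made-up flag.
    ok = challenge_is_healthy([sys.executable, "-c", "print('FLAG{example}')"], "FLAG{example}")
    print("healthy" if ok else "unhealthy")
```

A sidecar would call this on a schedule and expose the result, which is what alerting or a status dashboard could then consume.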

CTFd

Once again we used CTFd as our CTF platform for challenge and flag management. For the past few years we have been plagued with performance issues with CTFd at scale, specifically database connection leaks causing frustrating 500 errors for players. This year, however, we saw a huge improvement in CTFd performance. We upgraded to the latest version of CTFd, which was 3.6.0 at the time, and throughout the entire competition we only had a handful of 500 responses. This was a huge improvement compared to previous years and resulted in a much smoother experience for competitors overall.

The CTFd infra held up during the really critical moments of the CTF. With a peak QPS of 32.1k at the start of the competition, everything held together well and gave us a smooth start, which is always the scariest time for the Infra team.

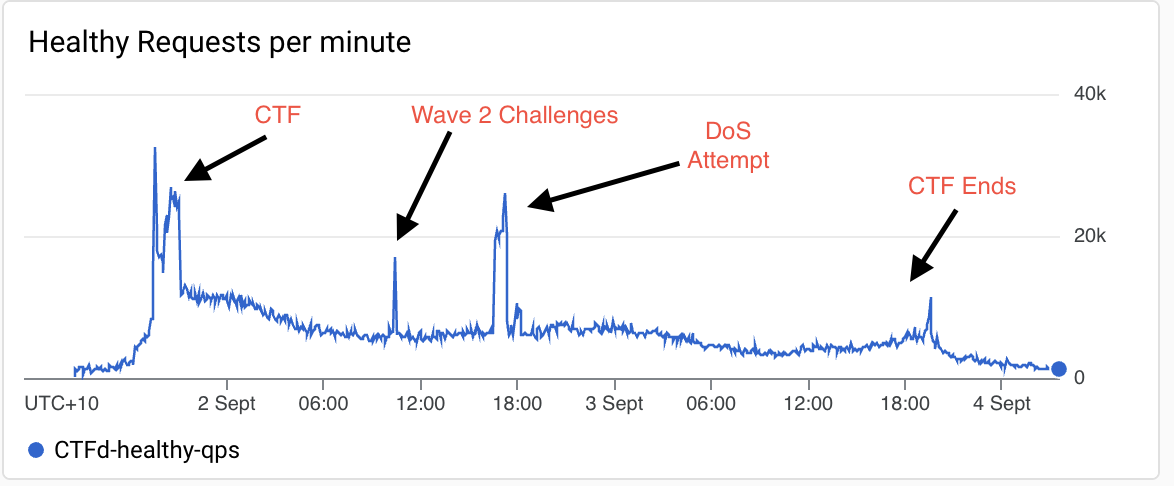

Here is a graph of the QPS (queries per second) for CTFd over the weekend. We can see that the key moments for CTFd are:

- Start of the CTF and wave 1 release

- Wave 2 challenges release

- End of the CTF

CTFd QPS

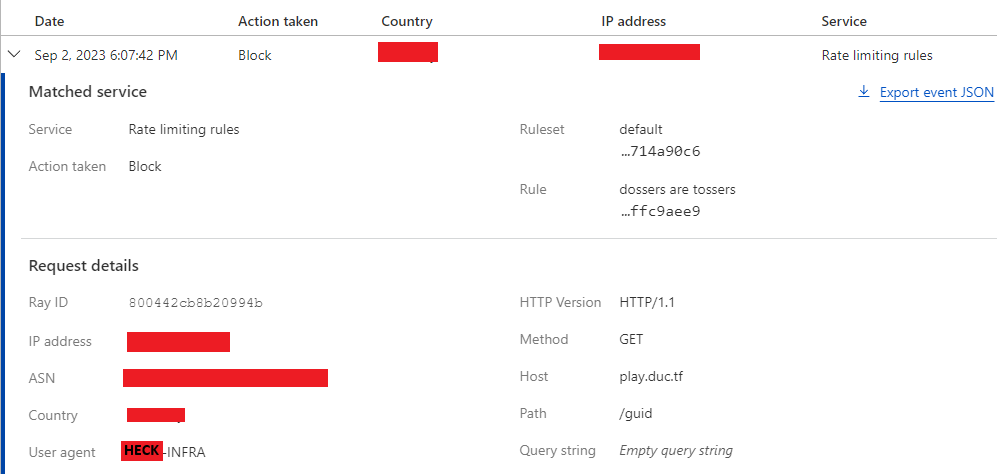

You may notice a spike in QPS in the middle of the event. At that point we hadn’t yet set up our rate limiting security settings, and we got DoS’d. Thankfully our cluster autoscaled to manage the load, so competitors were not heavily impacted, and once we got our rate limits in place QPS returned to normal.
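For readers unfamiliar with rate limiting, the Python sketch below shows one common approach, a per-client token bucket. This illustrates the general technique only and is not the mechanism or the settings we used; the class names and the numbers in the usage note are made up.

```python
from typing import Dict


class TokenBucket:
    """Allows short bursts while capping the sustained request rate."""

    def __init__(self, rate_per_s: float, burst: float, now: float = 0.0) -> None:
        self.rate_per_s = rate_per_s
        self.burst = burst
        self.tokens = burst      # start full so a new client can burst once
        self.last_seen = now

    def allow(self, now: float) -> bool:
        # Refill in proportion to the time elapsed, capped at the burst size.
        elapsed = max(0.0, now - self.last_seen)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate_per_s)
        self.last_seen = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class RateLimiter:
    """One bucket per client key, for example per source IP."""

    def __init__(self, rate_per_s: float, burst: float) -> None:
        self._rate_per_s = rate_per_s
        self._burst = burst
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, client: str, now: float) -> bool:
        bucket = self._buckets.get(client)
        if bucket is None:
            bucket = TokenBucket(self._rate_per_s, self._burst, now)
            self._buckets[client] = bucket
        return bucket.allow(now)
```

With `RateLimiter(rate_per_s=1.0, burst=2.0)`, a single client can send two requests immediately and then roughly one per second, while other clients are unaffected.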

Some players just want to see our infra burn 😭

DoS Block Entry

As we have said in all the previous years’ writeups, the DUCTF team wants to eventually create its own CTF platform that suits much larger player bases. Unfortunately, that is not a priority for us while CTFd is meeting our needs, but we do have concerns that this will no longer be the case if our player base jumps by an order of magnitude.

Blockchain Infrastructure

This year we released v2 of our blockchain challenge infra, a heavily improved version of v1 which:

- Decoupled challenge code from infra code

- Revamped the user interface

- Moved verification from offchain to onchain setup contracts

- Moved challenge/blockchain configuration into its own YAML file

- Essentially made the whole implementation a lot more modular

This resulted in a much better experience for authors creating challenges, as the author only needed to worry about the challenge contract itself and the state the smart contract must be in for the challenge to count as complete. Authors no longer need to worry about the underlying code that deploys the challenge and can stay in Solidity for the entire challenge development process.

The implementation works very similarly to last year’s setup with two containers deployed for each challenge, the geth blockchain node and the API to retrieve the challenge details, reset the challenge, and check whether the challenge is solved. The main difference this year was how we check whether a challenge has been solved. This is now offloaded to a separate smart contract which is defined by the author to check the state of the challenge contract.
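As a rough sketch of what the solved check can look like from the API side, the Python below builds a standard `eth_call` JSON-RPC request against the setup contract and decodes the boolean it returns. It assumes the setup contract exposes a no-argument view function returning `bool`; the function names here are hypothetical, and the 4-byte selector (the first four bytes of the Keccak-256 hash of the function signature) is passed in rather than hard-coded because it depends on what the author named that function.

```python
from typing import Any, Dict


def build_solved_call(setup_address: str, selector: str, request_id: int = 1) -> Dict[str, Any]:
    """Build an eth_call JSON-RPC request for a no-argument view function."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "eth_call",
        # eth_call executes the call against the latest block without
        # creating a transaction, so checking costs no gas.
        "params": [{"to": setup_address, "data": selector}, "latest"],
    }


def decode_bool(result_hex: str) -> bool:
    """Decode an ABI-encoded bool: a single 32-byte word holding 0 or 1."""
    if result_hex in ("", "0x"):
        # Empty return data, e.g. no contract at that address.
        return False
    return int(result_hex, 16) != 0
```

The request body would be POSTed to the instance's geth node, and the `result` field of the response passed to `decode_bool`.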

Aside from the fact that most players either love or hate blockchain challenges in CTFs, the feedback for the blockchain infrastructure was super positive, which we were very happy with. That said, for some reason each geth node took its sweet time starting up, meaning players had to wait a few minutes before they could access their isolated challenge instances.

In terms of improvements for next year, at the moment we think the infra is working very well for most Ethereum-based blockchain challenges. However, if future challenges require more novel setups, such as simulating other wallets’ transactions, complex multi-contract deployments, or even different blockchains entirely, we will need to extend our blockchain infra to support that.

Infra Documentation

So apparently the Infra team does not have an amazing long-term memory and every year when we sit down to deploy everything all over again, we forget what we had and how everything worked.

Infra team reading infra scripts

This forgetfulness is becoming a big problem as our setup gets more complex. Ideally, our automation and scripts should make redeploying as easy as possible, but every year we need to tweak and change things, and relearning everything without proper documentation to reference slows us down and wastes time that we could spend upgrading our existing setup.

Not to mention our bus factor is pretty low at the moment, with a lot of the knowledge of how everything works sitting in the heads of a couple of organisers. As the Infra team knows very well, we need to avoid single points of failure, and by distilling this knowledge into docs that anyone can learn from we can ensure that DUCTF can continue.

So it’s official, we have decided we need to write proper documentation on how to spin up our GCP resources, deploy our stack, and just general descriptions of what all our scripts do. We hope to eventually share this publicly so that anyone wanting to run a CTF can learn how the DUCTF crew does it and get up to speed quickly. At the time of writing, our “documentation” is a single primitive Google Doc. We plan to expand this and move it to a better platform to eventually share with the rest of the CTF community.

Miscellaneous

Challenge Status API

We also worked on a Challenge Status API, which unfortunately we did not get ready in time for the event. This would be an API that tracks the health status of challenges and notifies organisers (and eventually competitors) of any ongoing issues with challenges. We want to integrate this API with the challenge health checks so that a challenge’s status is updated automatically when a health check reports that the challenge is not working as intended.
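Since the API was not finished, the following Python sketch only illustrates the kind of logic we have in mind: health-check results come in, and a notification fires when a challenge changes state. The class name, the "up"/"down" states, and the rule of requiring several consecutive failures before marking a challenge down are all assumptions.

```python
from typing import Callable, Dict


class ChallengeStatusTracker:
    """Turns raw health-check results into status changes (hypothetical)."""

    def __init__(self, notify: Callable[[str, str], None], failures_before_down: int = 3) -> None:
        self._notify = notify                  # called as notify(challenge, new_status)
        self._threshold = failures_before_down
        self._failures: Dict[str, int] = {}    # consecutive failures per challenge
        self._status: Dict[str, str] = {}      # last known status per challenge

    def report(self, challenge: str, healthy: bool) -> str:
        previous = self._status.get(challenge, "up")
        if healthy:
            self._failures[challenge] = 0
            current = "up"
        else:
            self._failures[challenge] = self._failures.get(challenge, 0) + 1
            # A single failed check is not enough to flag the challenge.
            current = "down" if self._failures[challenge] >= self._threshold else previous
        self._status[challenge] = current
        if current != previous:
            self._notify(challenge, current)   # only notify on transitions
        return current
```

Notifying only on transitions keeps organisers from being flooded while a challenge stays down.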

We hope to have this ready for next year!

Isolated TCP Challenge

We had our first isolated challenge which was not a web-based challenge and ran over TCP. This was initially a tricky problem as usually our TCP based challenges have a unique port assigned to them and then isolation is provided through nsjail.

We couldn’t dynamically assign a unique port to each isolated instance, as this would require adding a lot more logic to our isolated challenge manager and we could potentially run out of ports. We solved this by requiring TLS to connect to this challenge (openssl rather than just nc), which let us use Traefik’s IngressRouteTCP to match on the SNI and route to the appropriate instance.
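The routing decision itself is simple once the SNI is available: every instance shares one port, and the hostname the client asks for during the TLS handshake picks the backend. The Python sketch below mimics that decision. It is not Traefik configuration, and the hostname layout (`<instance>.<challenge domain>`) and the `-svc` service naming are assumptions for illustration.

```python
from typing import Optional


def backend_for_sni(sni: str, challenge_domain: str) -> Optional[str]:
    """Map '<instance>.<challenge_domain>' to a backend service name."""
    sni = sni.lower().rstrip(".")
    suffix = "." + challenge_domain.lower()
    if not sni.endswith(suffix):
        return None  # not one of our challenge hostnames
    instance = sni[: -len(suffix)]
    if not instance or "." in instance:
        return None  # require exactly one label in front of the domain
    return f"{instance}-svc"
```

This also shows why plain `nc` cannot work here: a raw TCP connection carries no hostname, so there is nothing to match on. Clients need a TLS-capable tool that sends SNI, which in Python corresponds to passing `server_hostname` to `ssl.SSLContext.wrap_socket`.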

.dev TLD HTTP(Ye)s

The domain that we used to host our challenges this year was *2023.ductf.dev. One challenge needed to be served over HTTP and not HTTPS. This worked fine in our test setup on our test domain. However, once we deployed to prod, we could not for the life of us understand why the browser would continuously try to upgrade to HTTPS while cURL wouldn’t and worked fine.

After hours and hours of debugging our cluster and networking setups to ensure we weren’t sending any HSTS upgrade requests, we figured out that this is a ‘feature’ of the .dev TLD. The TLD is on the HSTS preload list, which means that any browser that uses this list will always upgrade to HTTPS for this TLD. cURL does not use this list, which is why it did not try to upgrade. To solve this, we hosted the problematic challenge on a domain that wasn’t on the HSTS preload list.
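The behaviour difference between the browser and cURL can be modelled in a few lines of Python. This is a simplified sketch of the decision a preload-aware client makes, assuming every preloaded entry also covers its subdomains; the function names and example hostnames are made up, and real browsers carry more detail per entry.

```python
from typing import Set
from urllib.parse import urlsplit


def is_preloaded(host: str, preloaded: Set[str]) -> bool:
    """True if the host or any parent domain, including the TLD, is listed."""
    labels = host.lower().rstrip(".").split(".")
    # Check "a.b.dev", then "b.dev", then "dev".
    return any(".".join(labels[i:]) in preloaded for i in range(len(labels)))


def url_the_client_requests(url: str, preloaded: Set[str]) -> str:
    """Rewrite http:// to https:// when the host is covered by the list."""
    parts = urlsplit(url)
    if parts.scheme == "http" and parts.hostname and is_preloaded(parts.hostname, preloaded):
        return parts._replace(scheme="https").geturl()
    return url
```

A browser behaves like `url_the_client_requests(url, {"dev", ...})`, so no server-side setting can stop the upgrade. A client without the list behaves like `url_the_client_requests(url, set())`, which is why the same URL worked from cURL.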

Wrapup

The DUCTF Infra team has gotten into the groove of how to get this whole event running with minimal disruptions but still sees lots of areas to get even better. We are always looking at how to make our setup better every year and hope by open-sourcing the entire setup we can work with the rest of the CTF community to build even better CTFs with more capabilities.

The whole DUCTF team did amazing this year and we are super happy to have hosted another fun event for our Australian students and players all around the world and hope to bring it back again for DUCTF 5.0 in 2024.

–

Infra team monitoring da graphs