Infra Writeup 2022

Published on 15 October 2022

DownUnderCTF had its third annual CTF in 2022, with a total of almost 4200 players this year and nearly 2000 teams. The Infrastructure team was hard at work again to make this the smoothest and coolest CTF experience for both our challenge authors and our players downunder 😉.

Let’s again revisit the infrastructure that powered DownUnderCTF for the third year running.

TL;DR

The good 👍:

- Player Isolated Challenges Part 2

- Blockchain Challenge infrastructure worked

- Zero downtime on CTFd during the event

- Infrastructure-as-Code is getting there

The bad 👎:

- Inadequate DDoS Protection

- CTFd giving us increasing pains

- Latency issues for international players

A huge thank you to our Infrastructure Sponsor Google Cloud for the third year in a row. We could not run this without your help.

Total cost of running the infrastructure on Google Cloud for the event came to $378.42 AUD 💰(before discounts and credits)

2021 Recap

In last year’s writeup, we had a wishlist of items that we wanted to solve for this year’s competition. We weren’t able to get to all the items but we were able to make some good progress.

- Create an Automated CI/CD Pipeline for challenges and CTFd (no more testing in Prod) ✔️

- Have a test instance of CTFd running to verify any changes we make before we push them to prod ✔️

- Increase DDOS and Dirbuster protection, we had some players scanning the infrastructure which were promptly banned, but we would like to automate this. ❌

- Have automated solve script health checks on each challenge which runs periodically so we can verify each challenge is working and alert us if it isn’t ❌

Isolated Challenges Part 2

Last year, we ran a pretty successful pilot of the isolated challenge infrastructure on a single web challenge. This year, we managed to run a whopping🍔7 challenges on this platform, and hopefully will be able to support this functionality a lot more. Also the code for all of this is open source! Check out https://github.com/DownUnderCTF/kube-ctf for more details on how to use it.

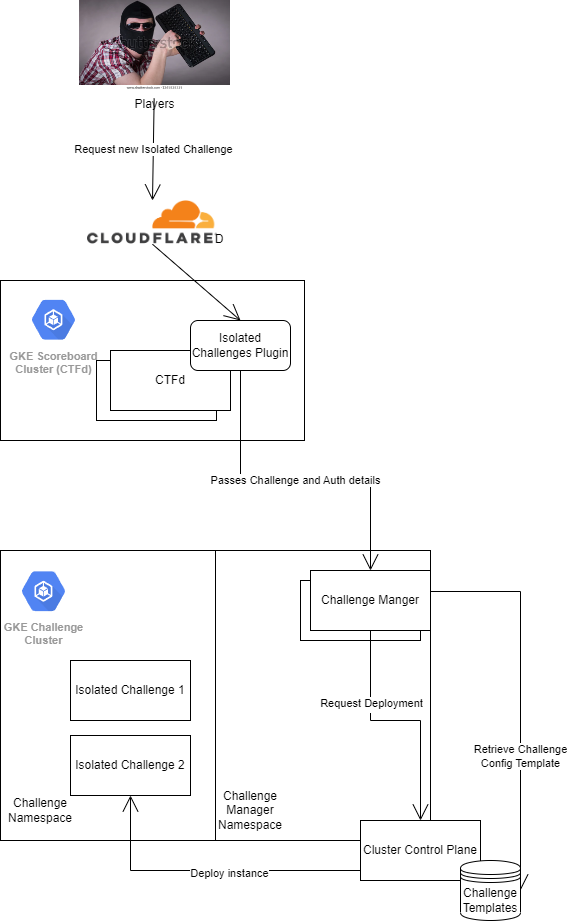

The way it works is that a player can request an instance of a challenge from the scoreboard, and an instance which can only be accessed by the team will be automatically provisioned (technically not true since anyone that finds another team’s hostname will be able to access the challenge). How it works is a little more interesting.

- Player requests a challenge to be deployed

- challenge-manager requests the template from the Kubernetes Control Plane

- The template is populated with some values, and then applied to the cluster

- Kubernetes automation takes over and creates the deployment, service and hostname-based ingress.
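The template step above can be sketched roughly as follows. This is a minimal illustration in Python, not the actual kube-ctf code: the template shape, the placeholder names (`challenge`, `instance_id`, `domain`) and the helper names are all assumptions made for the example.

```python
import secrets

# Hypothetical template, loosely shaped like the resources a challenge needs.
TEMPLATE = {
    "deployment": {"name": "{challenge}-{instance_id}"},
    "service": {"name": "{challenge}-{instance_id}"},
    "ingress": {"host": "{challenge}-{instance_id}.{domain}"},
}


def render(node, values):
    """Recursively fill str.format placeholders in a nested template."""
    if isinstance(node, str):
        return node.format(**values)
    if isinstance(node, dict):
        return {key: render(child, values) for key, child in node.items()}
    if isinstance(node, list):
        return [render(child, values) for child in node]
    return node


def new_instance(challenge, domain):
    """Populate the template for one team's instance."""
    values = {
        "challenge": challenge,
        "domain": domain,
        # Random suffix so the hostname is hard to guess (assumed scheme).
        "instance_id": secrets.token_hex(8),
    }
    return render(TEMPLATE, values)
```

Since the hostname is the only thing separating one team's instance from another, the sketch uses a random identifier rather than anything derived from the team name.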

Isolated Challenges Setup Diagram 2022

Of course we can’t really rely on players to shut down their challenge instance when they are finished with it, so each challenge resource is set to expire after a certain period of time which the player can extend. Once the resource is marked as expired💀, kube-janitor will remove the resources from the control plane which will then deprovision the instance.
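The expiry logic can be illustrated with a small sketch. It assumes each instance carries an expiry timestamp and that an extension pushes it out by a fixed amount from the time of the request; the 30 minute TTL and the function names are hypothetical, not values from the real setup.

```python
from datetime import datetime, timedelta, timezone

TTL = timedelta(minutes=30)  # assumed lifetime per deploy or extension


def initial_expiry(created_at):
    """Expiry assigned when the instance is first deployed."""
    return created_at + TTL


def extend(now):
    """New expiry after the player asks for more time."""
    return now + TTL


def expired_instances(expiries, now):
    """Names of instances a janitor-style sweep would remove."""
    return sorted(name for name, expires_at in expiries.items() if now >= expires_at)
```

A periodic sweep would call `expired_instances` with the current time and delete whatever it returns, which in turn deprovisions the instance.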

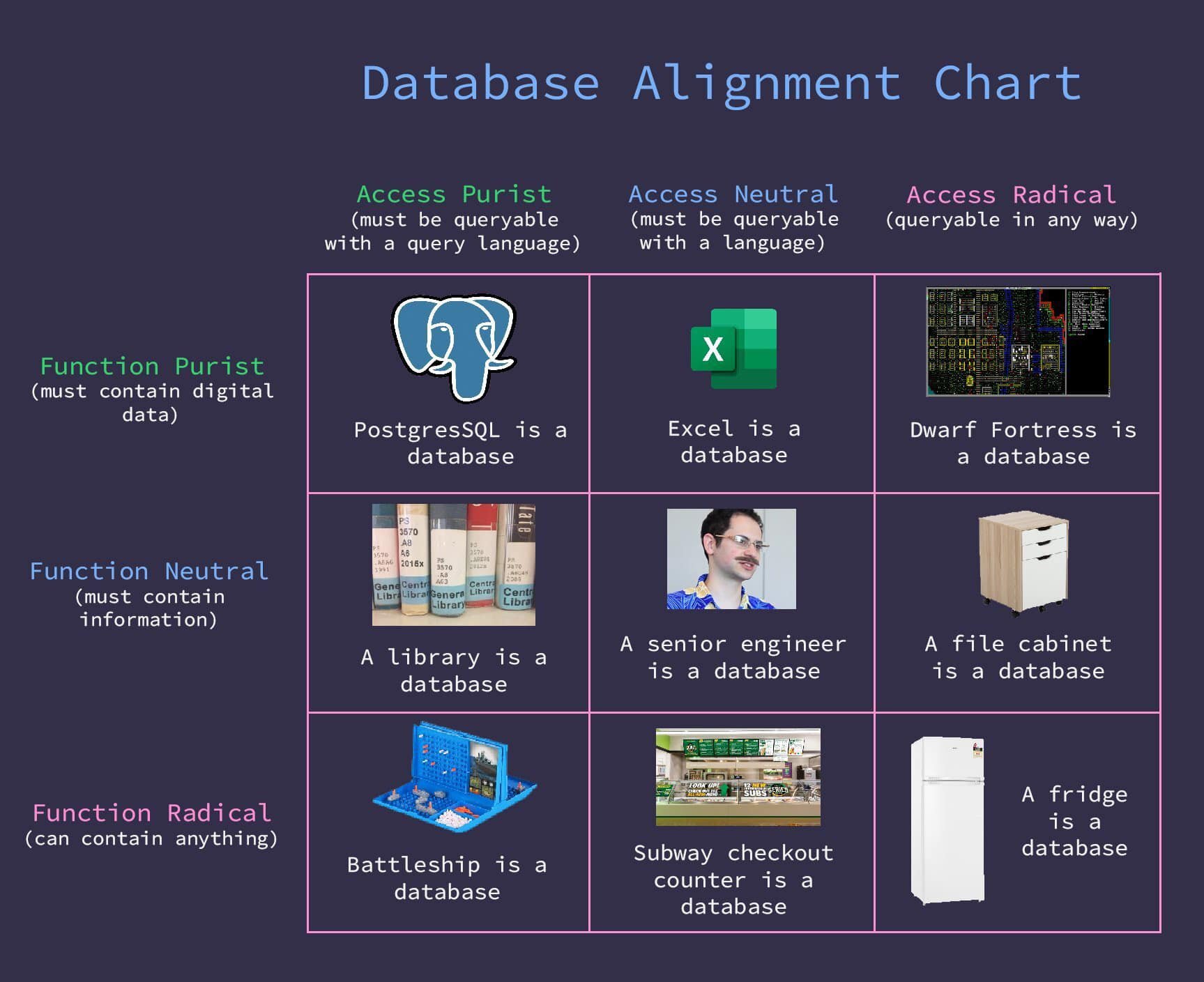

An improvement for the isolated infrastructure this year was that it no longer depended on any external services other than the Kubernetes control plane. We figured that Kubernetes itself is basically a database which means that it can be used to store the challenge templates through the magic of Custom Resource Definitions. This meant that it was much easier to deploy the infrastructure, and updating the configuration of a challenge is only a kubectl command away. The code is available above if you want to check out how this is implemented. Using helm to set up the supporting services was also much nicer than applying random config files all around the place as it helped us greatly with managing the versioning of the infrastructure code.

Anything's a database if you're brave enough

There was a minor incident with the isolated infrastructure which caused all new deployments for blockchain isolated challenges to fail between 2022-09-23 20:30 and 21:30 UTC. This was due to the janitor doing an oopsie and cleaning up a shared secret which was used to provide the sealing key to the pod. Once the secret was restored and the rule corrected, the problem was no more.

Blockchain Challenge Infrastructure🧱⛓️

Another requirement for this year was to build the infrastructure to allow for Blockchain/Smart Contract based challenges. There are plenty of CTFs out there which support blockchain challenges and all have different approaches, but we landed on one which used geth as a base blockchain and an API to manage the challenge. This was inspired by ChainFlag’s approach.

Due to the nature of these blockchain challenges, each player’s instance needs to be isolated from everyone else’s. If we were to run a single shared chain per challenge (or even one chain for all of the challenges), players would be able to look at other players’ transactions or potentially game the system somehow to cheat the challenges. One option is to filter out particular RPC calls to geth so that players can’t view other transactions on the chain (this is what ChainFlag does). However we thought: we already have the isolated challenge setup (see above), so why don’t we just use that?

So we did!

But how?

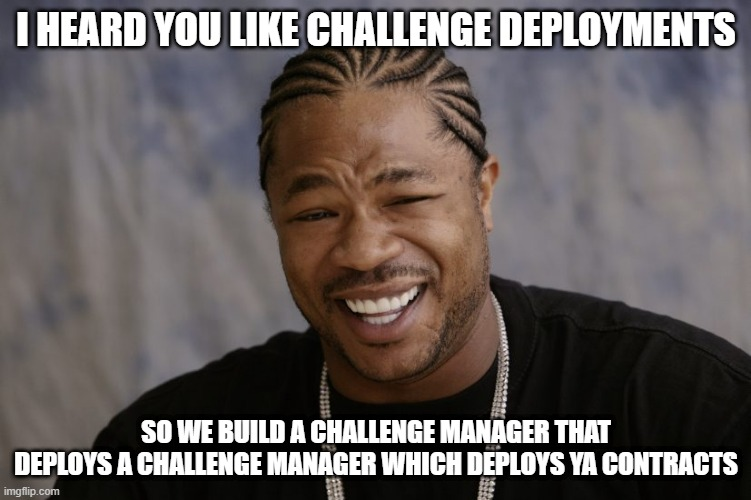

Our isolated challenge setup deploys 2 containers for each blockchain challenge instance: the Geth container and the API container. Geth is the base blockchain, and the API deploys the challenge contracts to the chain.

So many layers

GETH

Geth is our base blockchain container that uses a Proof-of-Authority consensus mechanism to create blocks (don’t need to be wasting resources mining blocks. We 💖🌳)

Our Geth setup is pretty simple and is based on the ethereum/client-go:stable image. When building our container we just need 2 things:

- The sealer account creds

- The genesis block config

The sealer account is the account that has the Authority to seal transactions and to create blocks. This is just a randomly generated account that is designated in the genesis block config.

The genesis block config provides the base config required for this chain. This would prefund our deployer address, set the block time and other configuration parameters.
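To make the genesis config more concrete, here is a sketch that builds one in Python. It assumes the chain uses geth's clique Proof-of-Authority engine; the chain ID, block period, gas limit, prefunded balance and function names are placeholder assumptions, not the values from the real config.

```python
import json


def strip_0x(address):
    """Return the address as lowercase hex without a 0x prefix."""
    address = address.lower()
    return address[2:] if address.startswith("0x") else address


def clique_genesis(chain_id, sealer, deployer, period_seconds=1):
    """Build a minimal clique genesis: one sealer, one prefunded deployer."""
    # Clique extraData layout: 32 bytes of vanity, the sealer address(es),
    # then 65 zero bytes reserved for the signature.
    extra_data = "0x" + "00" * 32 + strip_0x(sealer) + "00" * 65
    return {
        "config": {
            "chainId": chain_id,
            "homesteadBlock": 0,
            "eip150Block": 0,
            "eip155Block": 0,
            "eip158Block": 0,
            "byzantiumBlock": 0,
            "constantinopleBlock": 0,
            "petersburgBlock": 0,
            "istanbulBlock": 0,
            # "period" is the block time in seconds.
            "clique": {"period": period_seconds, "epoch": 30000},
        },
        "difficulty": "1",
        "gasLimit": hex(30_000_000),  # assumed limit
        "extraData": extra_data,
        # Prefund the deployer with 1000 ETH, expressed in wei (assumed amount).
        "alloc": {strip_0x(deployer): {"balance": str(1000 * 10**18)}},
    }


if __name__ == "__main__":
    # Placeholder addresses for illustration only.
    print(json.dumps(clique_genesis(1337, "0x" + "ab" * 20, "0x" + "cd" * 20), indent=2))
```

The resulting JSON is the kind of file that `geth init` consumes when the container image is built.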

API

The API we developed provided a simple HTTP interface to manage the challenge that the player had deployed. On startup the service checks which challenge is being deployed via environment variable and deploys that challenge, with any custom work defined by the challenge. We then generate and fund a random wallet and give this to the player.

The API, written in TypeScript using fastify as a framework, provided 3 endpoints:

- /challenge: Returns the current challenge contract addresses, as well as the player address and private key.

- /challenge/solve: Checks whether the challenge is complete. Essentially, it checks the state of the chain and returns the flag if the challenge has been solved.

- /challenge/reset: Drains the player’s funds, deploys a new instance of the challenge on the same chain and provides a new player wallet.
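The behaviour of the three endpoints can be modelled with a small in-memory sketch. This is Python rather than the TypeScript/fastify service itself, there is no real chain behind it, and every name here (`ChallengeInstance`, `solved_on_chain`, the response keys) is hypothetical. Addresses and keys are random hex stand-ins rather than real key pairs.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class ChallengeInstance:
    flag: str
    starting_balance: int = 10**18  # assumed funding, in wei
    balances: dict = field(default_factory=dict)
    solved_on_chain: bool = False  # stand-in for inspecting contract state

    def __post_init__(self):
        self._deploy()

    def _deploy(self):
        """Deploy a fresh contract and fund a fresh player wallet."""
        self.contract_address = "0x" + secrets.token_hex(20)
        self.player_address = "0x" + secrets.token_hex(20)
        self.player_key = "0x" + secrets.token_hex(32)
        self.balances[self.player_address] = self.starting_balance
        self.solved_on_chain = False

    def info(self):
        """Model of /challenge."""
        return {
            "contract_address": self.contract_address,
            "player_address": self.player_address,
            "player_key": self.player_key,
        }

    def solve(self):
        """Model of /challenge/solve: only reveal the flag once solved."""
        if self.solved_on_chain:
            return {"solved": True, "flag": self.flag}
        return {"solved": False}

    def reset(self):
        """Model of /challenge/reset: drain the old wallet, then redeploy."""
        self.balances[self.player_address] = 0
        self._deploy()
        return self.info()
```

In the real service, the `solved_on_chain` check would be a read of contract state via geth, and reset would move the remaining funds with an actual transaction.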

And that’s pretty much it. A simple setup which worked really well during the CTF! We have open sourced our infra setup at https://github.com/DownUnderCTF/eth-challenge-infra. There is still a lot of work we want to do to make the whole experience better for next year, but we were really happy this setup worked without any hiccups on the first go!

Cloud WHAT?

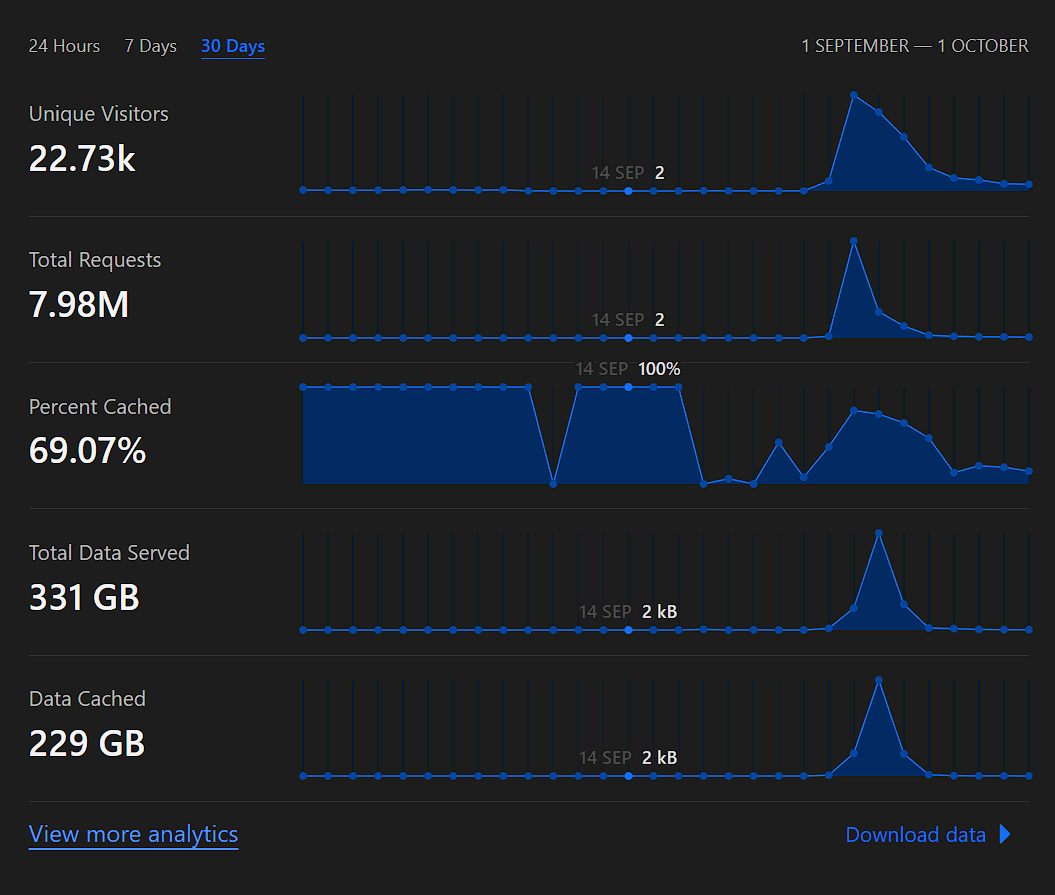

This year is the first year that we used Cloudflare as a CDN rather than only DNS hosting. We heard that Cloudflare provided good DDOS protection and wanted to see if it would work wonders for our setup. It would also potentially save us a lot of bandwidth to our origin, which Google charges a fortune for. We opted to use the CDN features only for the scoreboard, as a WAF in front of our challenges would probably block malicious requests to the challenges which is what we don’t want. Also Cloudflare doesn’t support proxying TCP challenges (at least on the free plan).

That's a lot of data!

Setup

Instead of setting up a Google Cloud Load Balancer for the scoreboard, we used cloudflared to connect the scoreboard to the internet. The way it works is that an outbound connection is created from our hosts to Cloudflare’s infrastructure, instead of the other way around. The benefit of this setup is that we didn’t have to expose any ports to the internet, meaning that players wouldn’t be able to find the load balancer’s IP address and launch a direct DDOS attack on the hosts. We were also able to save 2.5c an hour as we didn’t have to provision a load balancer either.

The cloudflared application is deployed through Kubernetes as a DaemonSet, in order to deploy a proxy instance on each host. You can have a look at this gist to see how it is configured.

I’m Under Attack

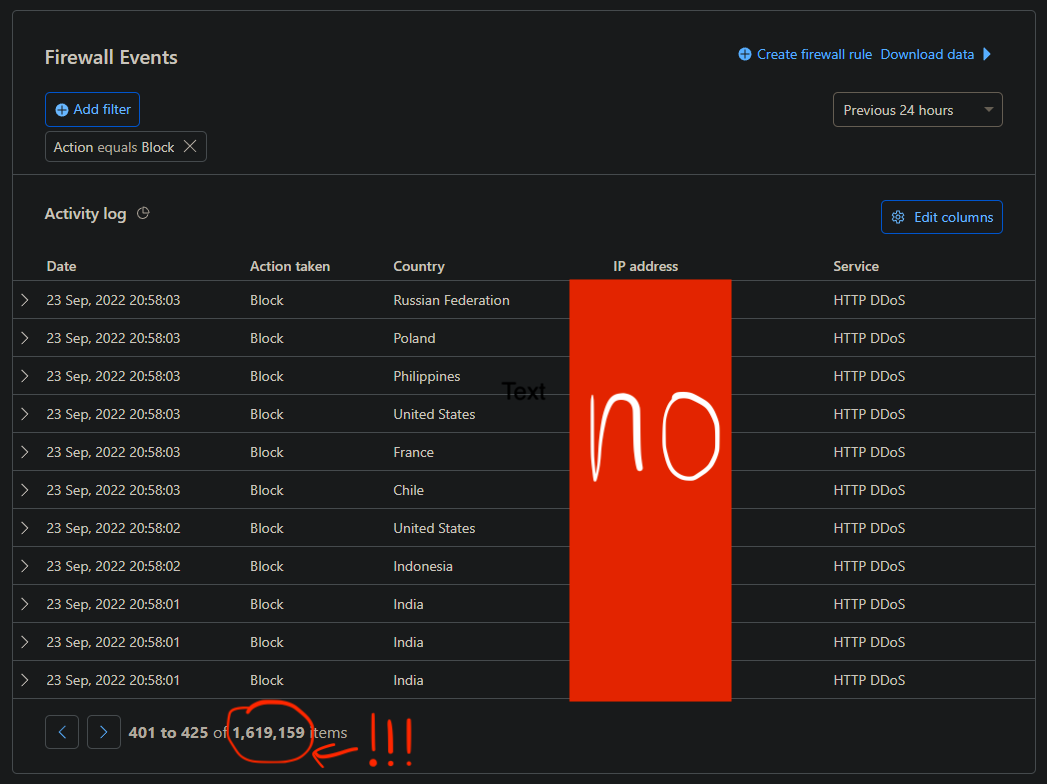

Who doesn’t love a fresh cup of 1.6mil DDoS requests right as the CTF starts?

After hosting last year’s CTF, we knew that DDOS protection and rate-limiting were a must for our infrastructure, as we had observed a lot of port scanning and dirbusting in the server logs. Additionally, we noticed that CTFd was not great at handling sudden load spikes, as it would return a flurry of 500s while Kubernetes scales up the replica count. Also let’s not get to the database connection leakage problem which we still haven’t figured out how to solve.

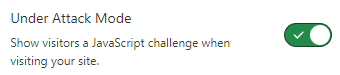

Just as the CTF started, we were hit with a huge dirbusting attempt which pretty much brought CTFd to its knees. The infra team was actively monitoring the attack and toggling the “I’m Under Attack” mode throughout the competition whenever we observed an anomalous load spike. The reason it wasn’t left on the whole time is that the interstitial page would block access to our bots due to them not having JavaScript enabled (we eventually figured a way around this), and it would also impact the player experience as it forces a captcha regularly which breaks the UI.
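The toggling described above was done by hand. As a purely hypothetical sketch of how the same decision could be automated, the snippet below flags a spike when the request rate jumps well above a rolling baseline and clears it once traffic calms down. The window size and thresholds are made-up values, and nothing here talks to Cloudflare.

```python
from collections import deque


class SpikeDetector:
    def __init__(self, window=5, on_factor=4.0, off_factor=1.5):
        self.baseline = deque(maxlen=window)  # recent calm requests-per-minute samples
        self.on_factor = on_factor    # turn protection on above baseline * on_factor
        self.off_factor = off_factor  # turn it off below baseline * off_factor
        self.under_attack = False

    def observe(self, requests_per_minute):
        """Feed one sample; returns whether protection should be enabled."""
        if len(self.baseline) < self.baseline.maxlen:
            # Still learning what normal traffic looks like.
            self.baseline.append(requests_per_minute)
            return self.under_attack
        average = sum(self.baseline) / len(self.baseline)
        if not self.under_attack and requests_per_minute > average * self.on_factor:
            self.under_attack = True
        elif self.under_attack and requests_per_minute < average * self.off_factor:
            self.under_attack = False
        if not self.under_attack:
            # Only calm samples update the baseline, so an attack cannot raise it.
            self.baseline.append(requests_per_minute)
        return self.under_attack
```

Using separate on and off thresholds avoids flapping the mode when traffic hovers around a single cut-off.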

oooweeee we're in danger

While the free plan was able to absorb the DDOS attack that we saw in the initial hours of the CTF, it also added significant latency to the scoreboard which our players noted in the feedback. For example, traffic from Optus and Telstra users was routed through Cloudflare’s HKG and NRT POPs instead of SYD, which adds at least 200ms of latency and is completely unnecessary since the scoreboard itself is hosted in SYD (see https://blog.cloudflare.com/the-relative-cost-of-bandwidth-around-the-world for an explanation of why they do this, TLDR Australian internet is expensive and not just for consumers).

However we do thank them for being able to provide such a great service at no cost to so many websites across the globe including us. We will most likely revert to using Google’s load balancer to front the scoreboard next year but we’ll need to figure out how to better insulate our infrastructure from attacks.

If anyone from Cloudflare is reading this, we’d be very grateful if you could sponsor us.

Miscellaneous Infra Things

Participation Certificate Generation

Similar to last year, we wanted to give our players something to take away from the competition regardless of where in the world you were playing from so we generated certificates for our players.

We did this by creating a CTFd plugin which is triggered by a logged-in user navigating to an endpoint. We then gather all the required information for the certificate to be generated for that player (username, team name, points, etc.), shove it in a JWT and send it over to a serverless Cloud Function which generates the PDF on the fly using pdf-lib and a PDF template designed in Canva.
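The JWT hand-off can be sketched with nothing but the Python standard library. This assumes an HS256 token signed with a secret shared between the plugin and the function; the actual algorithm, claim names and expiry handling used by the plugin are not stated in this writeup, so treat all of them as assumptions.

```python
import base64
import hashlib
import hmac
import json
import time


def b64url(data):
    """Base64url encode without padding, as JWTs require."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def b64url_decode(text):
    """Base64url decode, restoring the stripped padding."""
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def sign_jwt(claims, secret):
    """Plugin side: pack the certificate details into a signed token."""
    header = {"alg": "HS256", "typ": "JWT"}
    parts = [b64url(json.dumps(p, separators=(",", ":")).encode()) for p in (header, claims)]
    signing_input = ".".join(parts)
    signature = hmac.new(secret, signing_input.encode("ascii"), hashlib.sha256).digest()
    return signing_input + "." + b64url(signature)


def verify_jwt(token, secret, now=None):
    """Function side: check the signature and expiry before rendering a PDF."""
    try:
        header_b64, payload_b64, signature_b64 = token.split(".")
    except ValueError:
        raise ValueError("malformed token")
    expected = hmac.new(secret, f"{header_b64}.{payload_b64}".encode("ascii"), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, b64url_decode(signature_b64)):
        raise ValueError("bad signature")
    claims = json.loads(b64url_decode(payload_b64))
    current = time.time() if now is None else now
    if claims.get("exp", float("inf")) < current:
        raise ValueError("token expired")
    return claims
```

Signing the details means the function does not need database access and players cannot edit their own points or team name before the certificate is rendered.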

Large File Hosting

We only had 1 big file that needed to be hosted this year for some of the DFIR challenges. Since we were already using Cloudflare we wanted to try out their R2 buckets. This service is object storage in the cloud which has 0 egress fees! Egress fees for serving large objects for CTFs can be the largest expense of the infra.

For example, if we had used Cloud Storage, which has an egress fee of $0.19 / GB for Australia -> Australia traffic, we estimated that this would have cost us $102.60. This is also a conservative estimate, as a lot of our players were not in Australia and cross-continent egress would cost a lot more.
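As a quick sanity check on that estimate, the arithmetic can be run backwards. This assumes the rate is 19 cents ($0.19) per GB; the total download volume is not stated in this writeup, so the figure below is only what the estimate implies.

```python
RATE_PER_GB = 0.19      # assumed egress rate in dollars per GB
ESTIMATED_COST = 102.60  # estimate quoted above, in dollars


def egress_cost(total_gb, rate_per_gb=RATE_PER_GB):
    """Cost of serving total_gb of downloads at a flat per-GB rate."""
    return round(total_gb * rate_per_gb, 2)


def implied_volume_gb(cost, rate_per_gb=RATE_PER_GB):
    """Download volume that a given cost corresponds to."""
    return round(cost / rate_per_gb)


if __name__ == "__main__":
    print(implied_volume_gb(ESTIMATED_COST))  # about 540 GB of downloads
```

At that rate the estimate corresponds to roughly 540 GB served, which R2 delivered with no egress charge.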

We set up a Cloudflare Worker using this template to pass through the data, since R2 at the time did not support any public ACLs for its buckets (this has changed now that R2 has gone GA). This worked flawlessly: we had no issues and no expenses at the end of the event.

Santa’s Wish List 🎅

While we have gained a lot of experience over the last 2 years, the infra is still not perfect and never will be :). Alas we have some notes for what we can do better next time around.

- Move off CTFd!!👋 We’ve been thinking about this for a while as we are outgrowing the platform, but currently there is no viable alternative, which means that we’ll be looking at writing our own one!

- Work on better DDOS protection for the scoreboard. This was our first year using Cloudflare but we will most likely revert to using Google’s load balancer and CDN to front our scoreboard next year.

- Better automation for challenge preparation and deployment. We’ve got our netcat-based challenges down to an art with automatic generation of deployment configs, but the Kubernetes config for web challenges still needs to be manually reviewed for the most part. Also, manually crafting YAML files is no fun task.

- Multi-region deployments🌍🌎🌏 to support our ever-increasing international player base. For some of our challenges that require hundreds of round-trips, a player from New York isn’t going to have as great of a time as a player from Sydney.

- Have automated solve script health checks on each challenge which runs periodically so we can verify each challenge is working and alert us if it isn’t. Carried over from 2021.

- Decouple the blockchain infra from specific challenge implementation. We want to eventually get the blockchain infra to be a standalone repo which can take a config file and contracts to deploy a challenge as opposed to the custom code we have now.

Credits

From setting up and testing the scoreboard and challenges, to monitoring and warding off the DDOS attacks, it wouldn’t have been as smooth without the contributions of everyone on the infra team! In no particular order:

- Sam Calamos - samcalamos.me

- Tom Nguyen - tomn.me

- Jordan Bertasso - d3lta.dev

- Emily Trau - @emilyposting_

Also thanks again to the rest of the organising team for making the event a success 3 years in a row. What good would the infra be if we didn’t have any challenges, players or prizes?

Thanks for making it to the end, here is a monke gif as your reward.

Behind the scenes of infra team